AI-assisted ego therapy is changing mental health care in New York City. Therapists across the city are now using artificial intelligence software that listens to therapy sessions, with full patient consent, and pinpoints ego-driven speech patterns. The technology promises to accelerate therapeutic progress by offering real-time insights into how self-centered thinking may hinder personal growth. As the approach gains traction, it is sparking conversations about the intersection of technology and emotional healing in one of the world’s busiest urban hubs.

The Rise of AI in NYC Therapy Rooms

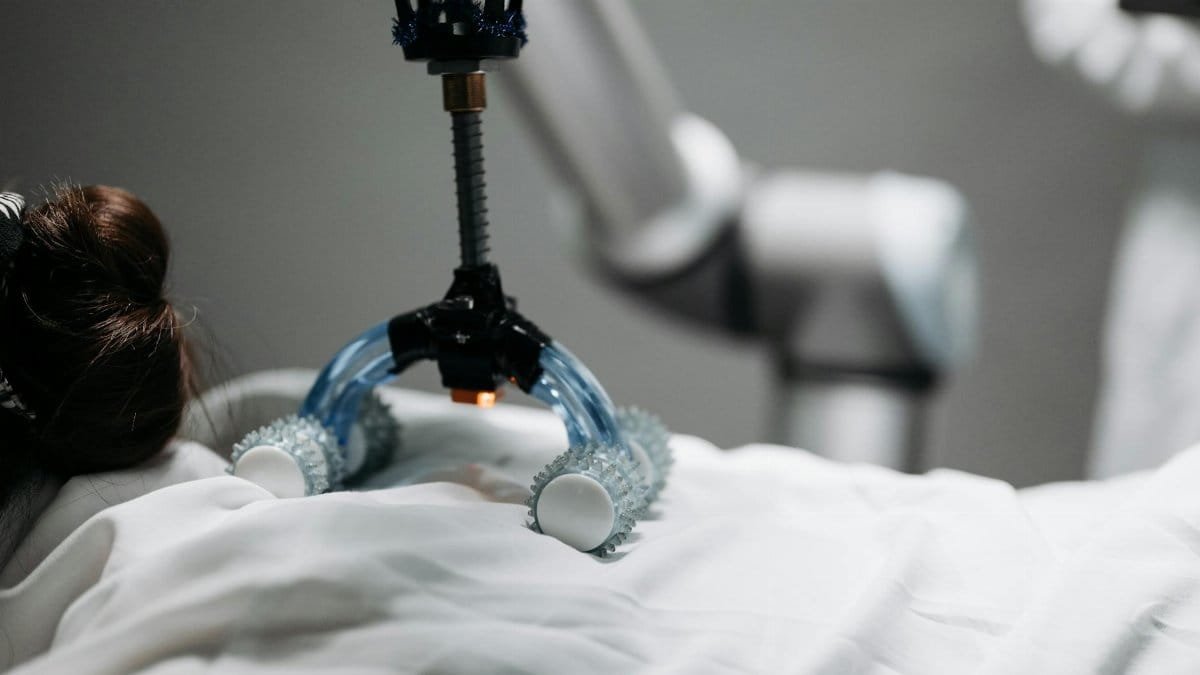

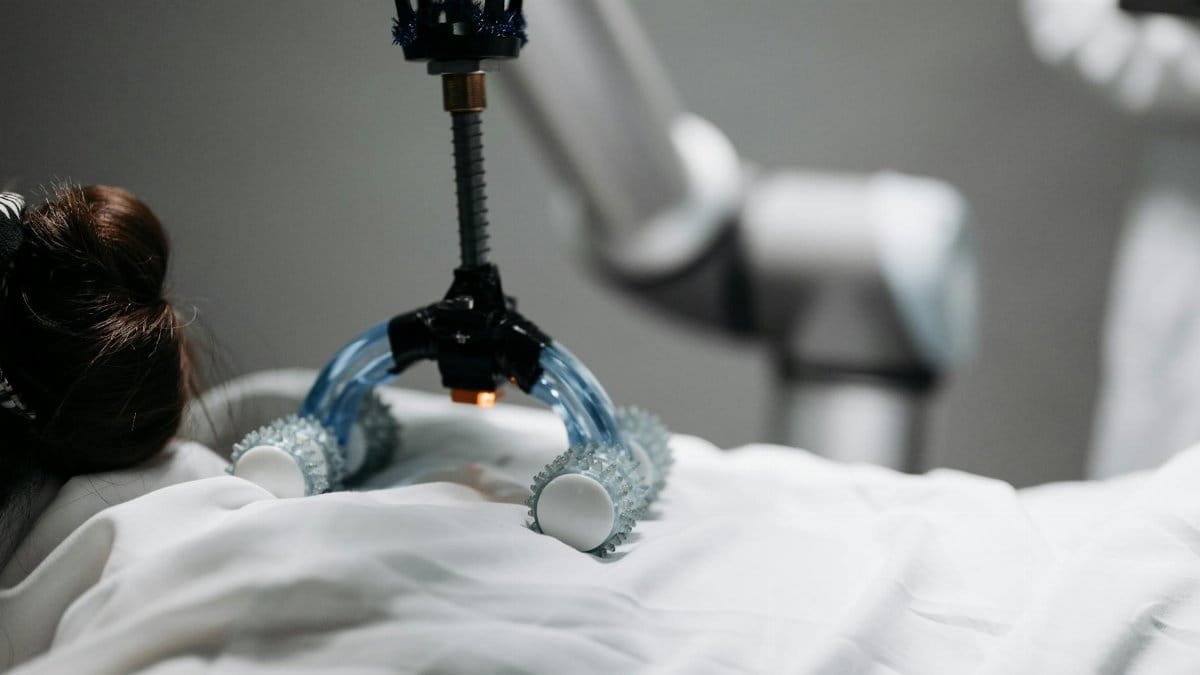

New York City, a hub for innovation, is seeing a quiet revolution in its therapy practices. Therapists are integrating AI tools designed to analyze conversations during sessions. These tools focus on detecting language that reflects ego-driven thoughts—patterns where self-absorption or defensiveness might dominate. By identifying these tendencies as they happen, the software provides therapists with data to guide patients toward healthier communication and self-awareness. This marks a significant departure from traditional methods, blending tech with human insight.

How AI Detects Ego-Driven Speech

The AI software used in these sessions listens to the flow of dialogue in real time. It is programmed to recognize specific linguistic cues: phrases or tones that suggest overemphasis on the self, such as excessive use of “I” statements or narratives centered on blame or superiority. Once flagged, these patterns are highlighted for the therapist, often through a discreet interface, so they can address the behavior during the session. This immediate feedback loop is what sets the technology apart from post-session analysis.
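To make the idea of linguistic cues concrete, here is a minimal Python sketch of how a single utterance might be scored. It is an illustration only, not the software the therapists use: the cue lists, the `analyze_utterance` function, the `UtteranceFlags` type, and the 0.2 threshold are all hypothetical, and a real tool would likely rely on far richer signals such as tone and context.

```python
import re
from dataclasses import dataclass

# Hypothetical cue lists, chosen only for illustration.
FIRST_PERSON = {"i", "me", "my", "mine", "myself"}
BLAME_PHRASES = ("their fault", "they always", "because of them")


@dataclass
class UtteranceFlags:
    first_person_ratio: float  # share of words that are first-person pronouns
    blame_hits: list[str]      # blame phrases found in the utterance
    flagged: bool              # True if either cue crosses its threshold


def analyze_utterance(text: str, ratio_threshold: float = 0.2) -> UtteranceFlags:
    """Score one utterance for self-focused and blame-centered language."""
    lowered = text.lower()
    # Split on anything that is not a letter, so "I'm" yields "i" and "m".
    words = re.findall(r"[a-z]+", lowered)
    if not words:
        return UtteranceFlags(0.0, [], False)
    ratio = sum(word in FIRST_PERSON for word in words) / len(words)
    hits = [phrase for phrase in BLAME_PHRASES if phrase in lowered]
    return UtteranceFlags(ratio, hits, ratio >= ratio_threshold or bool(hits))


if __name__ == "__main__":
    for line in ("I did it all myself and I deserve my credit",
                 "It was their fault",
                 "The weather was nice today"):
        print(line, "->", analyze_utterance(line))
```

In a live session, a function like this would run on each transcribed utterance as it arrives, and only the flagged ones would surface in the therapist’s interface. Simple word counts miss context, which is one reason the therapist’s judgment stays central.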

Consent at the Core of the Process

Privacy remains a top concern with any technology that records personal conversations. In NYC, therapists using this AI tool ensure that patients provide explicit consent before sessions are monitored. The process is transparent—clients are informed about how the software works, what data it collects, and how it’s used solely to enhance their therapeutic journey. This commitment to ethical standards is crucial in maintaining trust, especially in a field as sensitive as mental health care.

Accelerating Therapeutic Progress

The primary goal of AI-assisted ego therapy is to speed up the therapeutic process. Traditional therapy often relies on a therapist’s observations over multiple sessions to identify recurring patterns. With AI, these insights are available instantly, enabling quicker interventions. For instance, if a patient repeatedly frames challenges in a self-centered way, the therapist can redirect the conversation sooner, fostering greater self-reflection. Early adopters of this tech in NYC report that it helps clients break through mental blocks more efficiently.

Bridging Technology and Human Connection

While the AI provides valuable data, therapists emphasize that it’s no replacement for human empathy. The software serves as a tool, not a decision-maker. NYC practitioners using this technology stress that their role remains central—interpreting the AI’s findings through the lens of their training and the unique needs of each patient. This balance ensures that therapy retains its personal touch, even as algorithms play a supporting role in uncovering hidden patterns of thought.

Implications for Mental Health in 2025

As mental health tech continues to evolve, the use of AI in therapy could become a standard practice across the U.S. In 2025, NYC is likely to remain at the forefront of this trend, given its concentration of forward-thinking professionals and tech-savvy clientele. The success of AI-assisted ego therapy in NYC may inspire other cities to adopt similar tools, potentially reshaping how therapy is conducted nationwide. For now, the focus remains on refining the technology and ensuring it serves patients’ best interests.

Challenges and Criticisms of AI in Therapy

Despite its promise, integrating AI into therapy isn’t without hurdles. Some NYC therapists caution that over-reliance on technology could depersonalize the therapeutic experience. Others question the accuracy of AI in interpreting complex human emotions through speech alone, noting that context and non-verbal cues are often critical. Addressing these concerns will be key to ensuring that AI remains a helpful ally rather than a distraction in the deeply personal work of therapy.

Supporting Research and Resources

The integration of AI into mental health care aligns with broader trends in technology’s role in psychology. Studies from reputable institutions highlight the potential of AI to enhance therapeutic outcomes when used responsibly. For further reading on AI’s impact on mental health, explore resources from the National Institutes of Health, which often cover emerging health tech. Additionally, the American Psychological Association provides updates on ethical guidelines for technology in therapy, ensuring practitioners maintain high standards.